Updated April 3 at 12:37 p.m.

University students and computer scientists have been working for the past several months to reduce errors in human-robot interactions at the University’s Humans To Robots Laboratory. A research team of undergraduate and graduate students proposed a new model that programs a robot to retrieve objects more effectively by asking questions and processing information implied by hand gestures, according to the research team’s published article “Reducing Errors in Object-Fetching Interactions through Social Feedback.” The study’s findings are important because they might streamline many tasks related to health care, home life and maintenance activities, said David Whitney GS, co-author of the study.

Fetching items is a problem social robots currently face, according to the research team’s article. The act of fetching requires a robot to interpret human language and gestures to infer what item to deliver. “If the robot could ask questions, it would help the robot be faster and more accurate in its task,” according to the article. Currently, social robots are not programmed to ask questions.

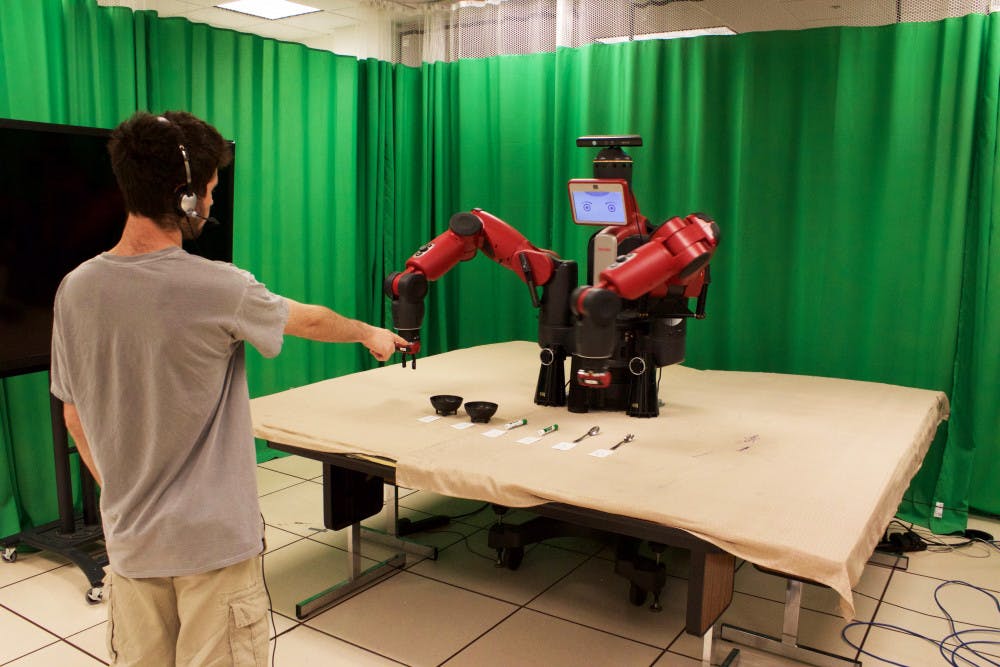

To test their program, which is based on a mathematical model that assigns probabilities to a group of objects, the researchers had a user stand in front of the robot with six items spread across a table directly in front of it. The six items were two identical plastic bowls, two identical Expo markers and two identical metal spoons. In the first set-up, the items were spread out along a large arc in front of the robot with the identical pairs placed far apart from one another, creating an unambiguous situation. In the second set-up, the items were in a line at the center of the table with identical pairs placed close to each other, making pointing a less effective method for getting the robot to distinguish items.

The researchers only wanted the robot to ask questions when it was in an ambiguous situation, such as in the second table set-up, said Eric Rosen ’18, co-author of the study. In a clear situation akin to the first table set-up, the robot should not ask a question, he said.
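The idea of keeping a probability for each object and asking a question only when the evidence is ambiguous can be illustrated with a minimal Python sketch. This is not the research team’s model: the function names, the likelihood values, the one-dimensional table positions and the confidence threshold are all assumptions chosen for illustration.

```python
import math


def normalize(weights):
    """Scale weights so they sum to 1 and form a probability distribution."""
    total = sum(weights)
    return [w / total for w in weights]


def update_with_speech(belief, names, word, match=0.95):
    """Raise the probability of objects whose name matches the spoken word.

    `match` is an assumed chance that the user names the item they want.
    """
    likelihood = [match if name == word else 1.0 - match for name in names]
    return normalize([b * l for b, l in zip(belief, likelihood)])


def update_with_pointing(belief, positions, pointed_at, sigma=0.15):
    """Raise the probability of objects near the pointed-at position.

    Positions are hypothetical 1-D coordinates along the table; `sigma`
    is an assumed amount of noise in the pointing gesture.
    """
    likelihood = [
        math.exp(-((x - pointed_at) ** 2) / (2 * sigma ** 2)) for x in positions
    ]
    return normalize([b * l for b, l in zip(belief, likelihood)])


def update_with_answer(belief, asked, said_yes, reliability=0.95):
    """Update the belief after the user answers "this one?" about one object."""
    likelihood = []
    for i in range(len(belief)):
        is_asked = i == asked
        agrees = is_asked == said_yes  # answer is consistent with object i
        likelihood.append(reliability if agrees else 1.0 - reliability)
    return normalize([b * l for b, l in zip(belief, likelihood)])


def choose_action(belief, threshold=0.8):
    """Pick the most likely object if confident enough, otherwise ask about it."""
    best = max(range(len(belief)), key=lambda i: belief[i])
    return ("pick" if belief[best] >= threshold else "ask", best)


def uniform(n):
    """Start with no preference among the n objects."""
    return [1.0 / n] * n


# Unambiguous set-up: identical items far apart.
spread_names = ["bowl", "marker", "spoon", "bowl", "marker", "spoon"]
spread_positions = [-1.0, -0.6, -0.2, 0.2, 0.6, 1.0]
belief = update_with_speech(uniform(6), spread_names, "marker")
belief = update_with_pointing(belief, spread_positions, pointed_at=-0.6)
spread_action = choose_action(belief)

# Ambiguous set-up: identical items side by side in a line.
line_names = ["bowl", "bowl", "marker", "marker", "spoon", "spoon"]
line_positions = [-0.25, -0.15, -0.05, 0.05, 0.15, 0.25]
belief = update_with_speech(uniform(6), line_names, "marker")
belief = update_with_pointing(belief, line_positions, pointed_at=0.01)
line_action = choose_action(belief)

# If the robot asked about an object and the user said "no", update and retry.
belief_after_no = update_with_answer(belief, line_action[1], said_yes=False)
final_action = choose_action(belief_after_no)

print(spread_action, line_action, final_action)
```

Under these assumed numbers, the spread-out arrangement gives the robot enough evidence to pick an item right away, while the in-line arrangement leaves two markers nearly tied, so the sketch asks a question before committing.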

Overall, the researchers found that the model was accurate, detecting the correct item with 88.4 percent accuracy in the ambiguous table set-up and 97.9 percent accuracy in the unambiguous table set-up. The results of the experiments also confirmed the team’s hypothesis that asking questions would improve the robot’s ability to accurately retrieve items in the ambiguous set-up. In the unambiguous set-up, the model was much faster without the robot asking a question, the study found.

It is important that robots ask questions when retrieving objects because “the sensing of robots is very poor,” Whitney said. The researchers were surprised that the simple questions the robot asked “dramatically increased (the) accuracy, and people enjoy it more because it feels like a real conversation,” Whitney said.

This current technology with robots “has profound implications,” said Joyce Chai, professor in the department of computer science and engineering at Michigan State University, who was cited in the research team’s published article. “We see robots in hotel service, elderly care, … but you cannot expect humans to work with robots by using (complex) interfaces to control the robot,” she added. Since language is the most natural means by which humans interact with one another, it is important for language to exist between robots and humans, Chai said. “I think what the group has done will have important applications in the future for joint tasks,” she added.

Though the team’s research was a significant development in this area of study, robots’ communication with humans can still be further enhanced, Rosen and Whitney said. “In this particular project, we only implemented two modalities of communication: speaking and pointing gestures,” Rosen said. “But the way robots understand speaking is very basic,” he added. They currently do not understand the concepts of “left” and “right,” he said. The Humans To Robots Laboratory hopes to expand on its research so that the robot system can understand this concept of directionality and more complex syntax, Whitney said.

The team’s research will be presented this year at the International Conference on Robotics and Automation to be held from May 29 to June 3 in Singapore.